3-Dimensional Reconstruction from Monocular Image Sequences

Description

Image-driven environment perception is one of the main research topics in autonomous robotics. The thesis of Sascha Jockel investigated and implemented an image-based three-dimensional reconstruction system for such robot applications, targeting everyday table scenes. The scene is captured from two positions, separated in space and time, by a micro-head camera mounted on the six-degree-of-freedom robot arm of our service robot TASER. The epipolar geometry and the fundamental matrix are computed interactively: the user selects 10 corresponding corners in the two input images from the candidates proposed by a Harris corner detector. The images are then rectified using the computed fundamental matrix so that corresponding scanlines lie on the same vertical image coordinate. Afterwards, stereo correspondence is computed with a fast Birchfield algorithm, which yields a 2.5-dimensional depth map of the scene. Based on the depth map, a three-dimensional textured point cloud is presented as an interactive OpenGL scene model.
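The role of the fundamental matrix in the rectification step can be illustrated with a small sketch. It is not the thesis implementation: the function names, the example points, and the matrix `F_RECTIFIED` are assumptions chosen for illustration. For a point x in the first image, the matching point x' in the second image must lie on the epipolar line l' = F x, so x'ᵀ F x = 0. For an ideally rectified pair, F takes (up to scale) the form below, and the constraint reduces to "both points share the same image row".

```python
def epipolar_line(F, x):
    """Return the epipolar line l' = F x as coefficients (a, b, c).

    F is a 3x3 fundamental matrix (list of rows) and x = (u, v) is a pixel
    in the first image. The line satisfies a*u' + b*v' + c = 0 for every
    candidate match (u', v') in the second image.
    """
    u, v = x
    xh = (u, v, 1.0)  # homogeneous pixel coordinates
    return tuple(sum(F[r][c] * xh[c] for c in range(3)) for r in range(3))


def epipolar_residual(F, x_first, x_second):
    """Evaluate x'^T F x; it is zero for a geometrically consistent pair."""
    a, b, c = epipolar_line(F, x_first)
    u2, v2 = x_second
    return a * u2 + b * v2 + c


# Assumed example: fundamental matrix of an ideally rectified image pair.
F_RECTIFIED = [
    [0.0, 0.0, 0.0],
    [0.0, 0.0, -1.0],
    [0.0, 1.0, 0.0],
]

corner_first = (120.0, 85.0)   # hypothetical corner in the first image
same_row = (97.0, 85.0)        # candidate on the same scanline
other_row = (97.0, 90.0)       # candidate five rows lower

print(epipolar_line(F_RECTIFIED, corner_first))                 # (0.0, -1.0, 85.0)
print(epipolar_residual(F_RECTIFIED, corner_first, same_row))   # 0.0
print(epipolar_residual(F_RECTIFIED, corner_first, other_row))  # -5.0
```

After rectification the correspondence search therefore only has to scan along one image row, which is what a scanline-based stereo matcher relies on.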

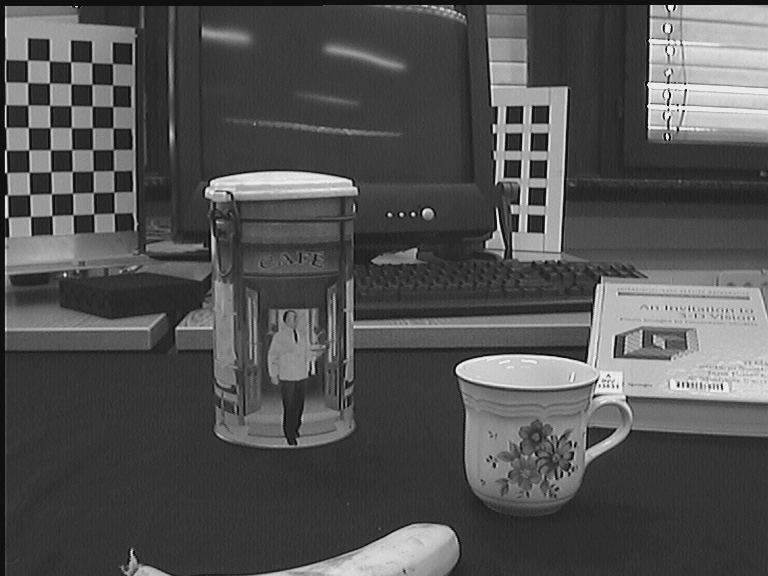

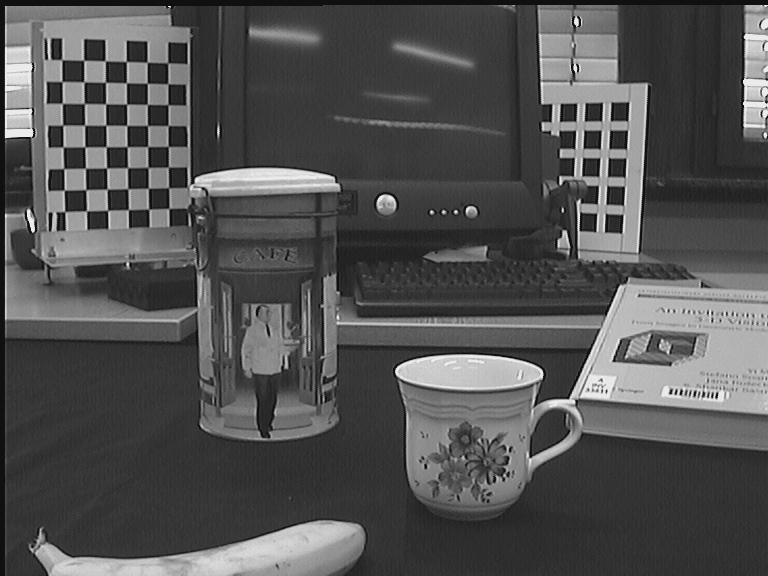

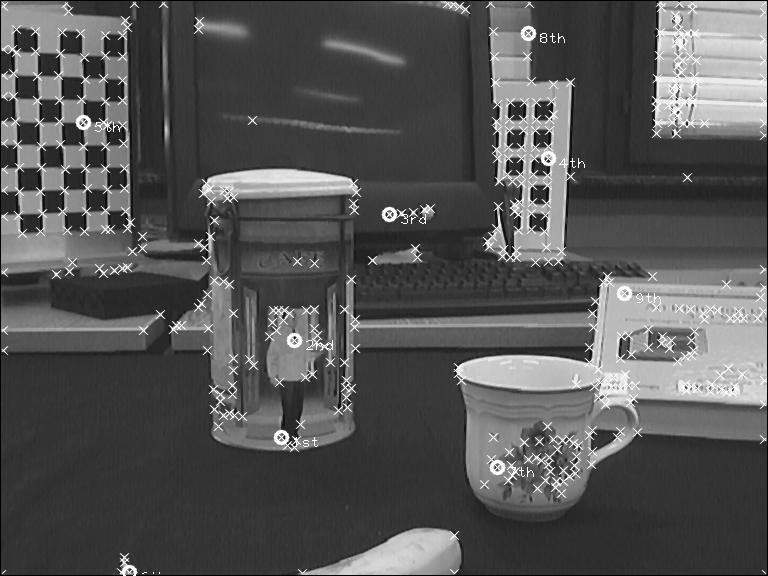

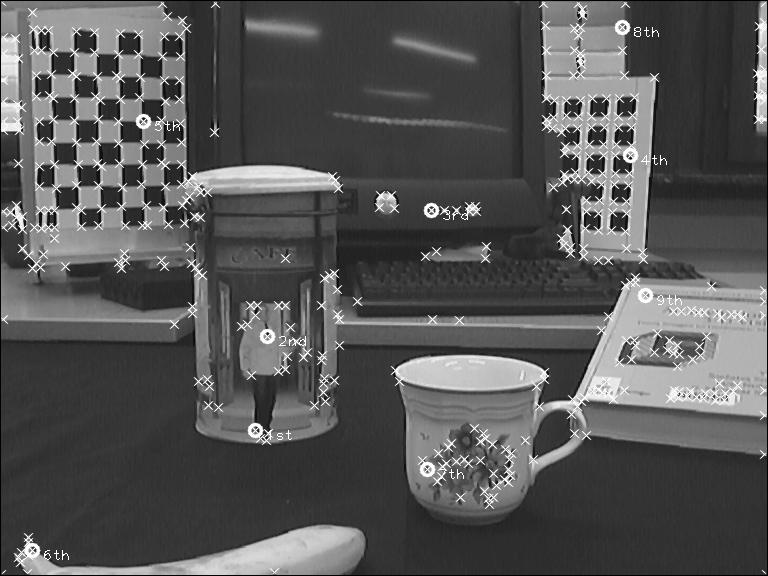

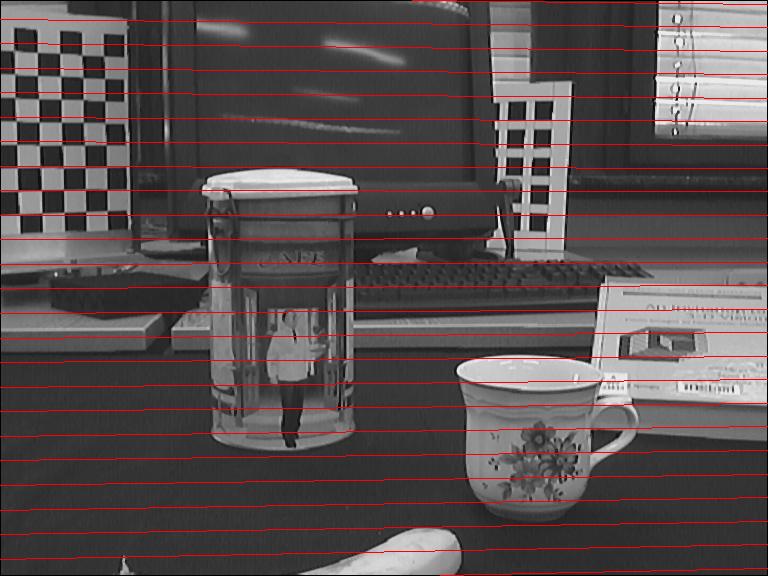

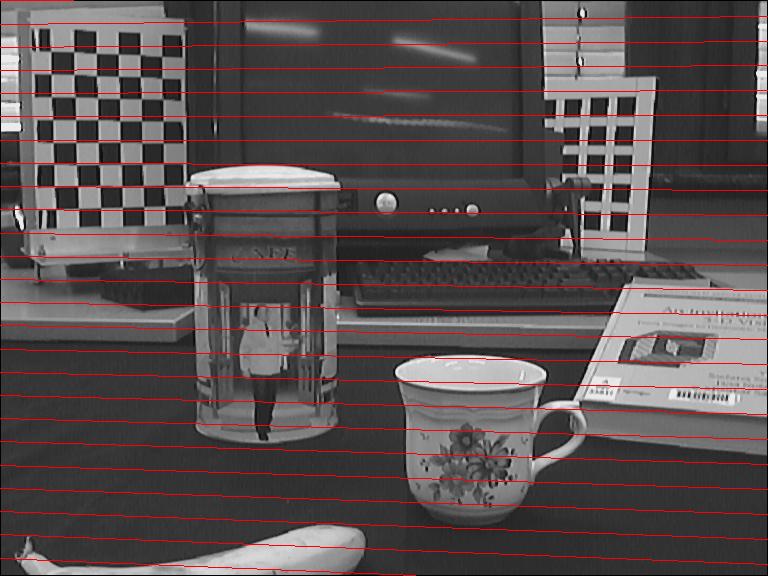

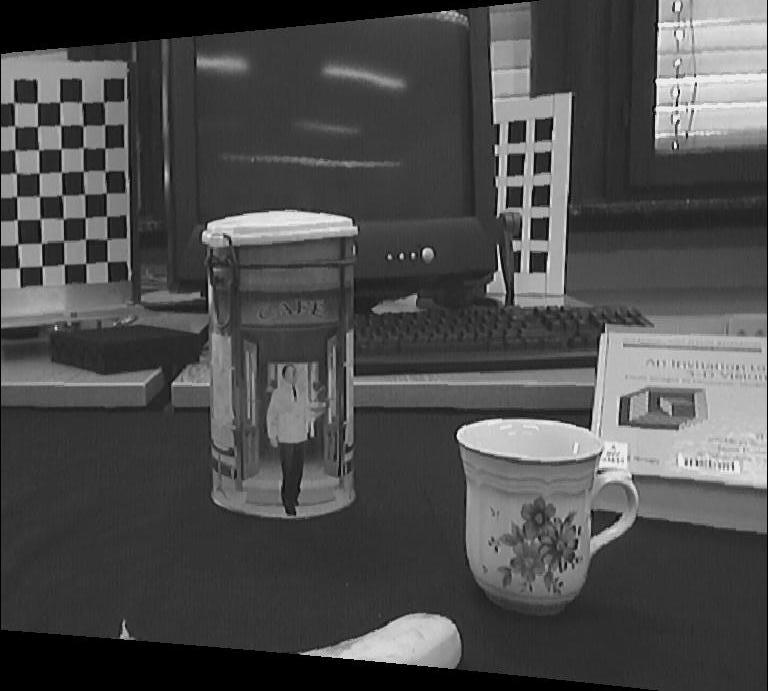

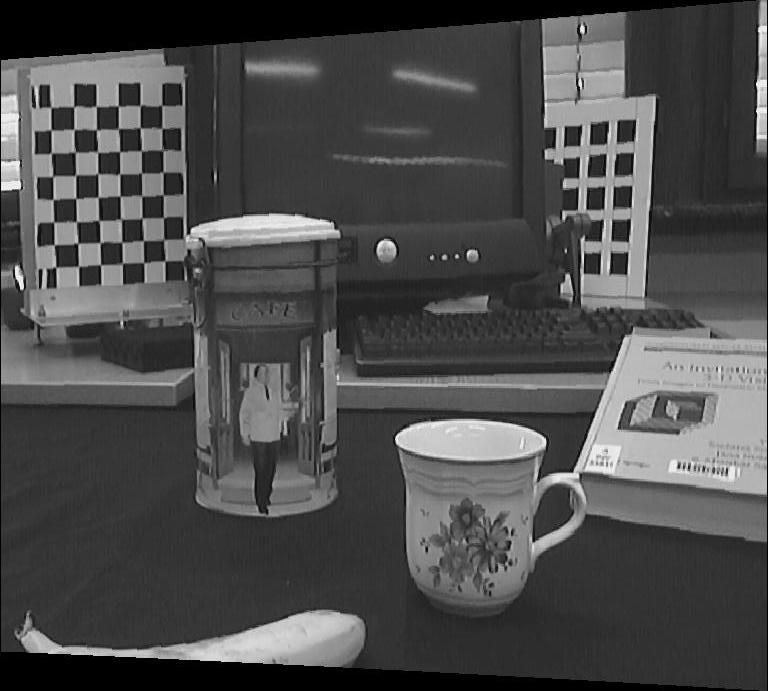

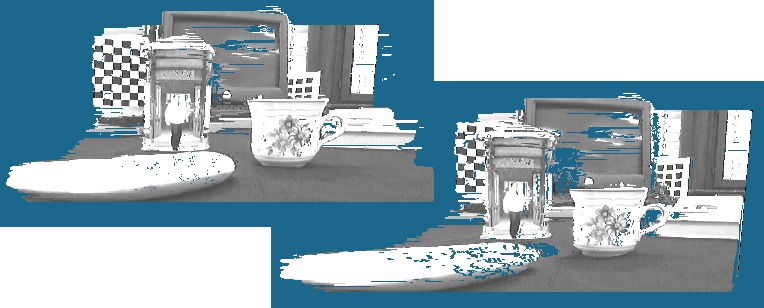

The pictures illustrate the successive steps of the reconstruction system. The first row shows the original captured images. The second row presents the results of the corner detection algorithm, from which the user has to choose a few corresponding points in both images. The third row shows the rectified images, and the fourth row shows the resulting depth map. The depth map is used to compute the depth of each pixel, and the resulting 3-dimensional model is presented in the last row.
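How a depth map turns into a 3-dimensional point cloud can be sketched as follows. This is a minimal illustration, not the method used in the thesis: it assumes a rectified pair with a pinhole camera whose focal length `focal_px`, baseline `baseline_m`, and principal point `(cx, cy)` are known, none of which are stated on this page. Under these assumptions a disparity d gives the depth Z = f·B/d, and the pixel (u, v) is back-projected to X = (u − cx)·Z/f and Y = (v − cy)·Z/f.

```python
def disparity_to_points(disparity, focal_px, baseline_m, cx, cy):
    """Back-project a disparity map into a list of (X, Y, Z) points.

    disparity is a list of rows of disparities in pixels. Pixels with a
    non-positive disparity carry no depth information and are skipped.
    All camera parameters are assumed values for illustration.
    """
    points = []
    for v, row in enumerate(disparity):
        for u, d in enumerate(row):
            if d <= 0:
                continue  # no valid match for this pixel
            z = focal_px * baseline_m / d   # depth from disparity
            x = (u - cx) * z / focal_px     # horizontal offset from the optical axis
            y = (v - cy) * z / focal_px     # vertical offset from the optical axis
            points.append((x, y, z))
    return points


# Tiny hypothetical 2x2 disparity map; larger disparity means a closer point.
disparity = [
    [0, 10],
    [20, 40],
]
cloud = disparity_to_points(disparity, focal_px=500.0, baseline_m=0.1, cx=0.5, cy=0.5)
for point in cloud:
    print(point)
```

In a textured point cloud, each point would additionally carry the colour of its source pixel so that a renderer such as OpenGL can draw it.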

Images

| Step | Images |
| --- | --- |
| Input images |  |
| Feature extraction |  |
| Epipolar lines |  |
| Rectified images |  |
| Depth image |  |
| A few 3-dimensional views |  |

Further Information

- Denis Klimentjew, Andre Stroh, Sascha Jockel, Jianwei Zhang: "Real-Time 3D Environment Perception: An Application for Small Humanoid Robots", in Proceedings of the 2008 IEEE International Conference on Robotics and Biomimetics (ROBIO), Bangkok, Thailand, February 21-26, 2009, pp. 354-359. Paper: [TAMS server], ISBN: 978-1-4244-2679-9

- Sascha Jockel, Tim Baier-Löwenstein, Jianwei Zhang: "Three-Dimensional Monocular Scene Reconstruction for Service-Robots: An Application", in Proceedings of VISAPP 2007 - Second International Conference on Computer Vision Theory and Applications, Vol. Special Sessions, Barcelona, Spain, March 8-11, 2007, INSTICC Press, pp. 41-46. Paper: [TAMS server (2.4 MB)]

- Sascha Jockel: "3-dimensionale Rekonstruktion einer Tischszene aus monokularen Handkamera-Bildsequenzen im Kontext autonomer Serviceroboter", diploma thesis, June 2006, first supervisor: J. Zhang, second supervisor: W. Hansmann. Diploma thesis: [TAMS server (7.2 MB)]