Datasets

This page provides datasets from robotic experiments our group has worked with. For each dataset we list the paper(s) that use it. You may use the data freely, but you are required to cite the paper in which the dataset was originally introduced.

Where datasets are based on publicly available datasets by other researchers, we link to their page. If you're using data from these authors, you may cite their paper. Some of these datasets have been pre-processed so that they can be used with our algorithms. If you use such a modified dataset, please cite both our paper and the original paper.

Our datasets are licensed under a Creative Commons Attribution-Noncommercial-Share Alike 3.0 Unported License. The datasets are distributed WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE.

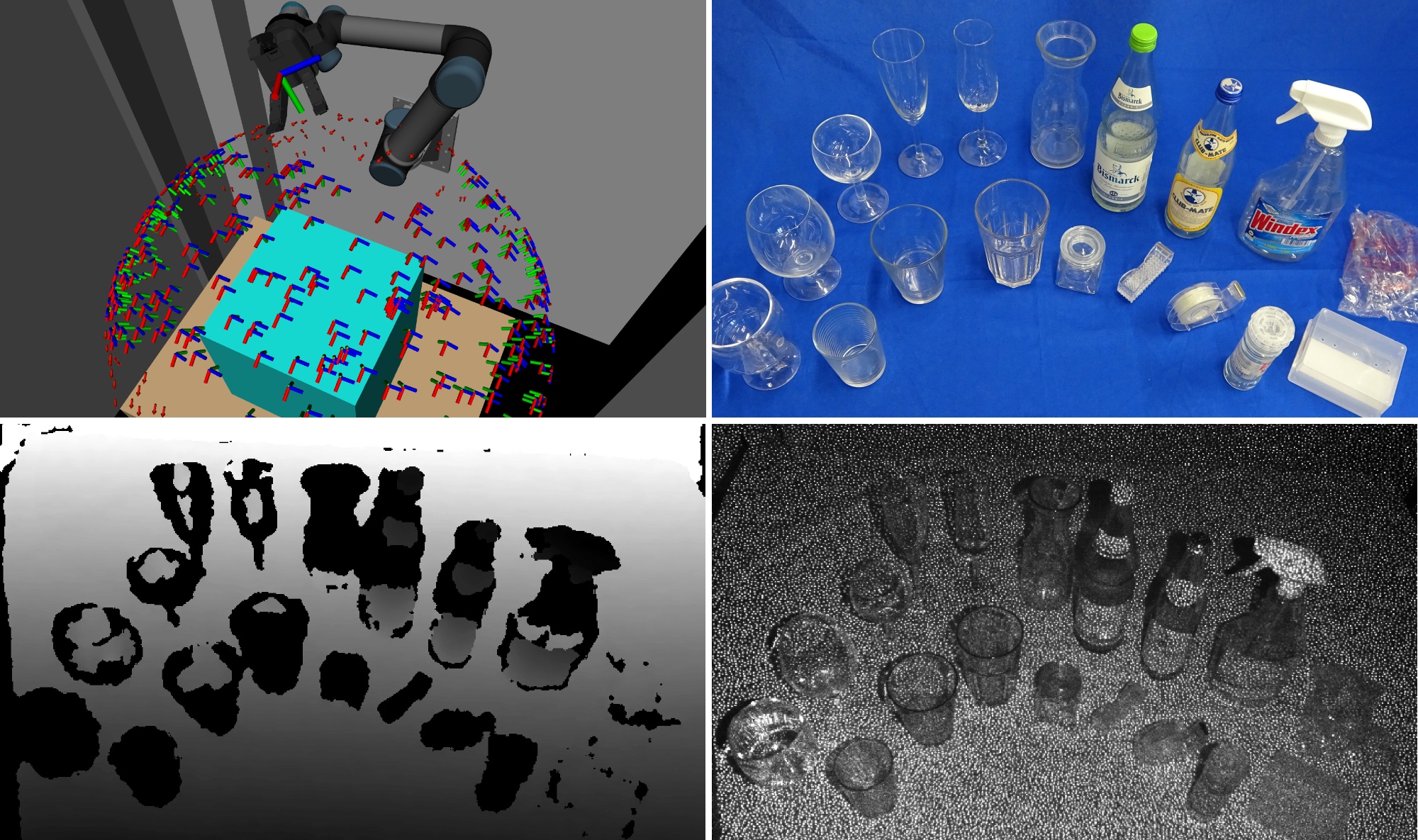

Detection and Reconstruction of Transparent Objects with Infrared Projection-based RGB-D Cameras

This dataset accompanies our publication "Detection and Reconstruction of Transparent Objects with Infrared Projection-based RGB-D Cameras" (ICCSIP 2020). We recorded various transparent and partially transparent objects with an Orbbec Astra camera mounted on a UR5 robotic arm. Notably, the IR image channel was also recorded and is evaluated in the associated paper. Around 100 randomly distributed observation poses were recorded for each object.

PointNetGPD grasps dataset

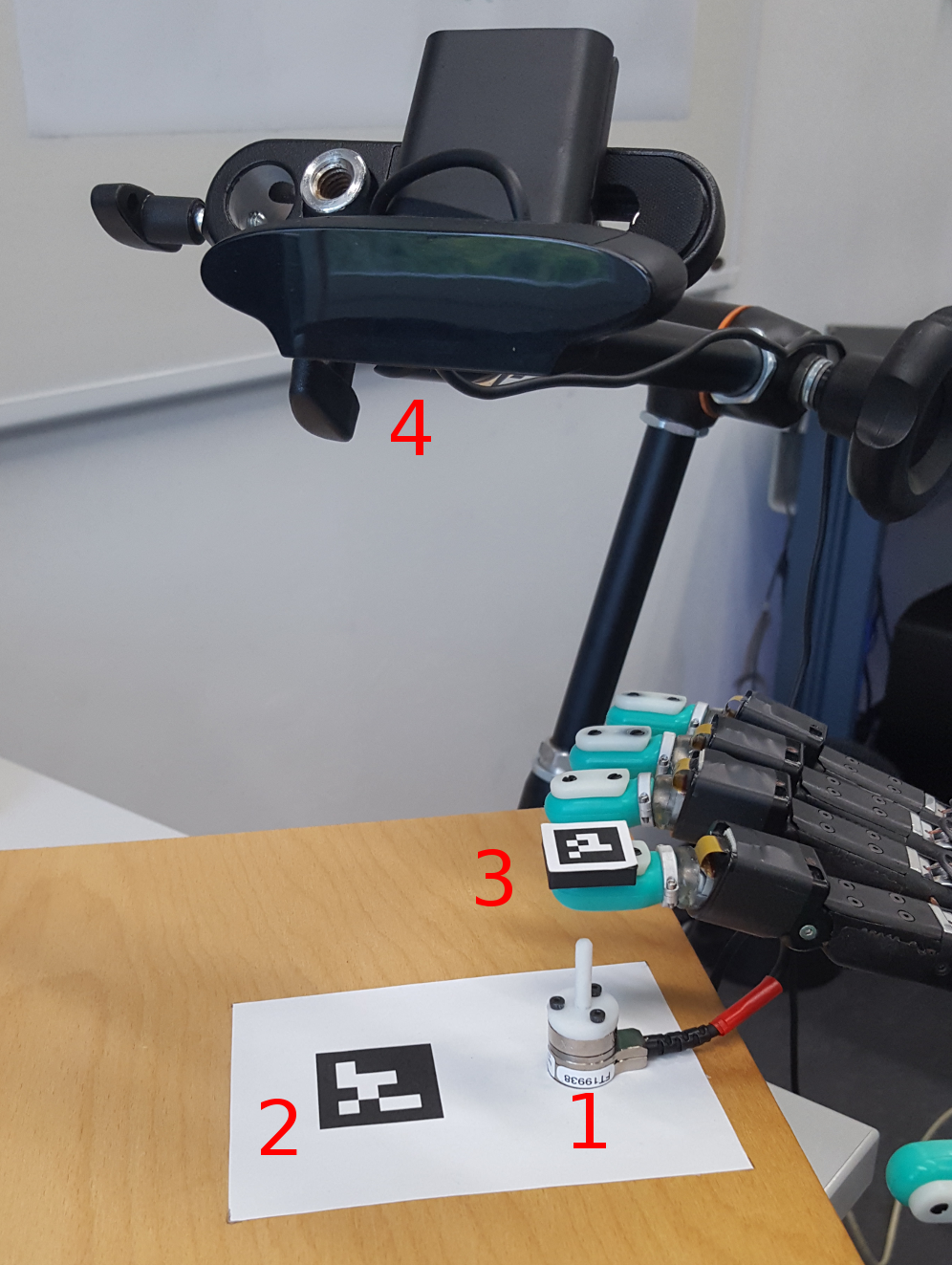

PointNetGPD (ICRA 2019) is an end-to-end grasp evaluation model that addresses the challenging problem of localizing robot grasp configurations directly from the point cloud. PointNetGPD is lightweight and can directly process the 3D point cloud that lies within the gripper for grasp evaluation. Taking the raw point cloud as input, our proposed grasp evaluation network can capture the complex geometric structure of the contact area between the gripper and the object, even if the point cloud is very sparse. To further improve our proposed model, we generated a larger-scale grasp dataset for training, with 350k real point clouds and grasps on objects from the YCB object dataset. [Code and usage] [Download dataset here]

BioTac Single Contact Response

ROS bag recordings that explore the responses of a BioTac© sensor while it is repeatedly pushed onto a single stick, making contact at different points. The recordings comprise the sensor response, the readings of a force-torque sensor on which the test stick is mounted, estimated 3D positions of the BioTac with respect to the tip of the stick, and recorded image data. In addition, estimated (unrefined) points of contact on the BioTac surface were computed and are included as RViz markers. [Download here]

License

Creative Commons Attribution 4.0 License

References

- Philipp Ruppel, Yannick Jonetzko, Michael Görner, Norman Hendrich and Jianwei Zhang, Simulation of the SynTouch BioTac Sensor, The 15th International Conference on Intelligent Autonomous Systems, IAS-15 2018, Baden Baden, Germany.

Multi Visual Features Fusion for Object Affordance Recognition

The data was collected by Jinpeng Mi at the Department of Informatics, University of Hamburg. This dataset is used for learning object affordances, e.g., calling, drinking(I), drinking(II), eating(I), eating(II), playing, reading, writing, cleaning and cooking. The dataset can be downloaded at [multi visual features fusion for object affordance recognition].

License

Creative Commons Attribution-Noncommercial-Share Alike 4.0 License

TAMS Office

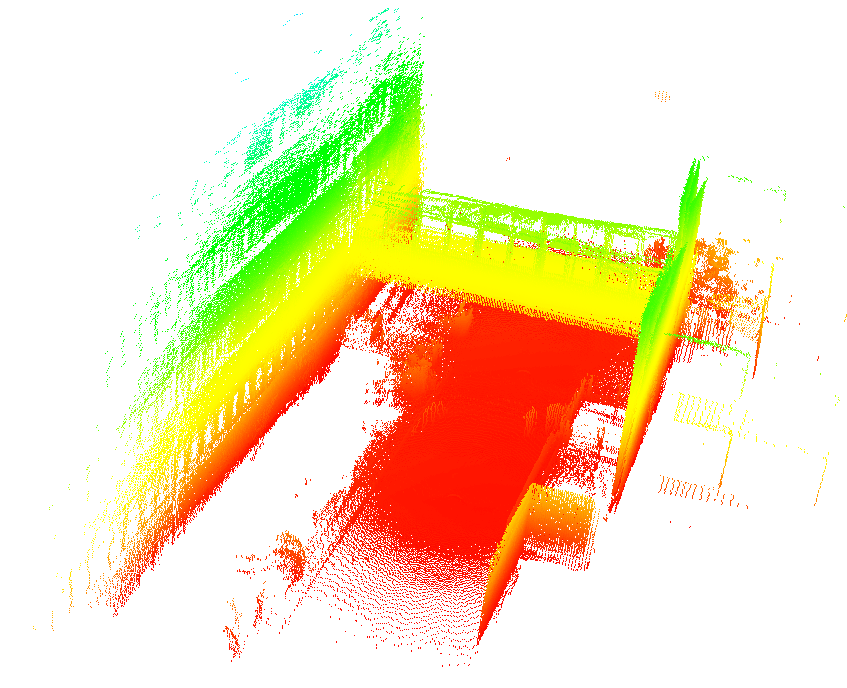

The dataset comprises 40 point clouds gathered with our customized 3D scanner at different positions on a floor that has a kitchen, a robot laboratory and several offices. The organized point clouds can be downloaded at [organized point clouds]. The point clouds are stored as PCD files; please refer to the Point Cloud Library (PCL) documentation for details of the file format.

License

Creative Commons Attribution-Noncommercial-Share Alike 4.0 License

References

- Xiao, J., Zhang J.H., Adler, B., Zhang, H., and Zhang J.W. (2013). Three-dimensional Point Cloud Plane Segmentation in Both Structured and Unstructured Environments. Robotics and Autonomous Systems, revision under review.

Informatikum Bridge

The dataset was gathered at the Department of Informatics, University of Hamburg. The pan resolution is set to 0.5 degrees and the 2D scan resolution is 0.25 degrees, which yields organized point clouds with 720 rows and 540 columns, 388,800 points in total. The "bridge" area between two buildings was chosen as the scenario; a side-view photo of the area is shown in Figure 3. The organized point clouds can be downloaded at [organized point clouds]. The point clouds are stored as PCD files; please refer to the Point Cloud Library (PCL) documentation for details of the file format.
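As a rough illustration of how the organized layout can be checked, the sketch below parses a PCD header and maps a (row, column) pair to a linear point index. The sample header is synthetic, and assigning WIDTH to the 540 columns and HEIGHT to the 720 rows is an assumption based on the PCL convention that organized clouds are stored row by row; the actual files may be laid out differently. The helper names `read_pcd_header` and `organized_index` are hypothetical.

```python
import io


def read_pcd_header(stream):
    """Collect PCD header entries (VERSION, FIELDS, WIDTH, ...) up to DATA.

    Accepts a text stream or a binary stream; the header itself is ASCII
    even when the point data that follows is binary.
    """
    header = {}
    for raw in stream:
        if isinstance(raw, bytes):
            raw = raw.decode("ascii")
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        key, _, value = line.partition(" ")
        header[key.upper()] = value.split()
        if key.upper() == "DATA":
            break  # point data starts after this line
    return header


def organized_index(row, col, width):
    """Linear index of the point at (row, col) in a row-major organized cloud."""
    return row * width + col


# Synthetic header for illustration only (assumed layout, not a real file).
SAMPLE_HEADER = """# .PCD v0.7 - Point Cloud Data file format
VERSION 0.7
FIELDS x y z
SIZE 4 4 4
TYPE F F F
COUNT 1 1 1
WIDTH 540
HEIGHT 720
VIEWPOINT 0 0 0 1 0 0 0
POINTS 388800
DATA ascii
"""

header = read_pcd_header(io.StringIO(SAMPLE_HEADER))
width = int(header["WIDTH"][0])
height = int(header["HEIGHT"][0])
points = int(header["POINTS"][0])

# An organized cloud has exactly width * height points.
assert width * height == points
last_index = organized_index(height - 1, width - 1, width)
```

For a downloaded file, the same function could be called on `open(path, "rb")`; checking that `WIDTH * HEIGHT` equals `POINTS` is a quick way to confirm that a cloud is organized rather than a flat list of points.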

Figure 3: left: A photo of the "bridge" area; right: the map built with our planar segment based registration.

License

Creative Commons Attribution-Noncommercial-Share Alike 4.0 License

References

- Xiao, J., Adler, B., and Zhang, H. (2012). 3D point cloud registration based on planar surfaces. In IEEE International Conference on Multisensor Fusion and Information Integration, pages 40-45, Hamburg, Germany.

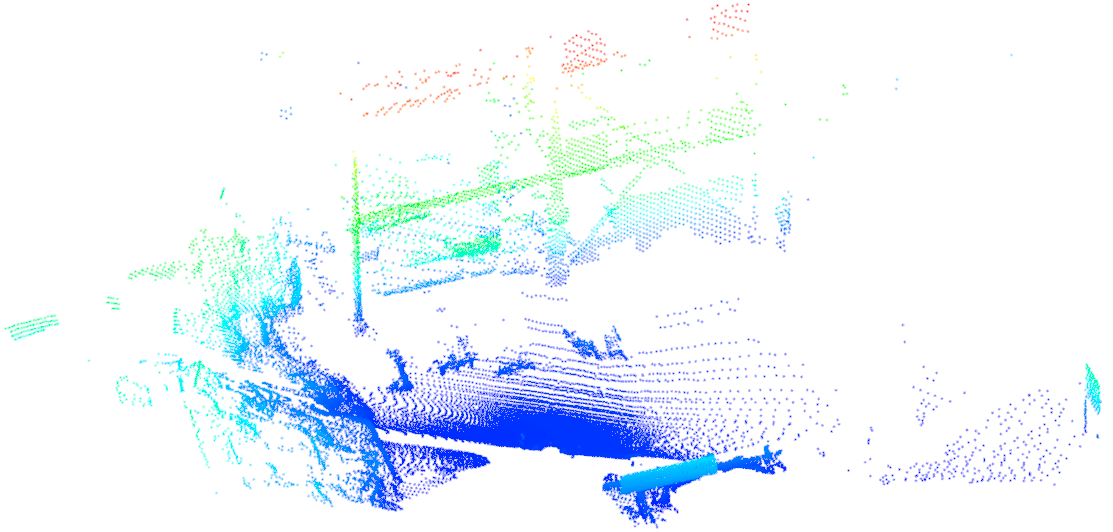

Collapsed Car Parking Lot

This dataset was collected by Pathak et al. (2010) during the 2008 NIST Response Robot Evaluation Exercise at Disaster City, Texas. The raw data are available at [Jacobs Robotics]. The data were gathered by a tracked robot equipped with an aLRF; the aLRF is based on a SICK S 300, which has a horizontal FoV of 270 deg covered by 541 beams. The sensor is pitched from -90 deg to +90 deg at a spacing of 0.5 deg, which leads to an organized point cloud of 541×361=195,301 points per sample. The dataset provides range images built by aligning each scan slice as a row. However, because the LRF's FoV is larger than 180 deg, all scan slices in a point cloud intersect at two points on the rotation axis, which causes intersections when projecting spatial points to the range image and vice versa; see Xiao et al. (2013). In order to apply range image based algorithms to this dataset, we have reordered the range images; see Figure 2, which shows a range image before and after reordering. The reordered organized point clouds can be downloaded at [reordered pcd]. The point clouds are stored as PCD files; please refer to the Point Cloud Library (PCL) documentation for details of the file format.
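The point count quoted above can be reproduced with a few lines of arithmetic. This is only a sanity check under the assumption that both end angles are sampled (-90 and +90 deg for the pitch, and both edges of the 270 deg FoV for the beams); the function name `samples_inclusive` is hypothetical.

```python
def samples_inclusive(start_deg, stop_deg, step_deg):
    """Number of samples from start to stop at a fixed step, both ends included."""
    return int(round((stop_deg - start_deg) / step_deg)) + 1


beams_per_slice = 541  # stated for the SICK S 300 over its 270 deg FoV

# Pitching from -90 to +90 deg in 0.5 deg steps gives one slice per step.
pitch_steps = samples_inclusive(-90.0, 90.0, 0.5)

# Angular spacing between neighbouring beams, assuming both edge beams count.
beam_spacing_deg = 270.0 / (beams_per_slice - 1)

# One organized point cloud: beams per slice times number of slices.
total_points = beams_per_slice * pitch_steps

assert pitch_steps == 361
assert total_points == 195301
```

Under these assumptions the beam spacing also comes out at 0.5 deg, matching the pitch spacing.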

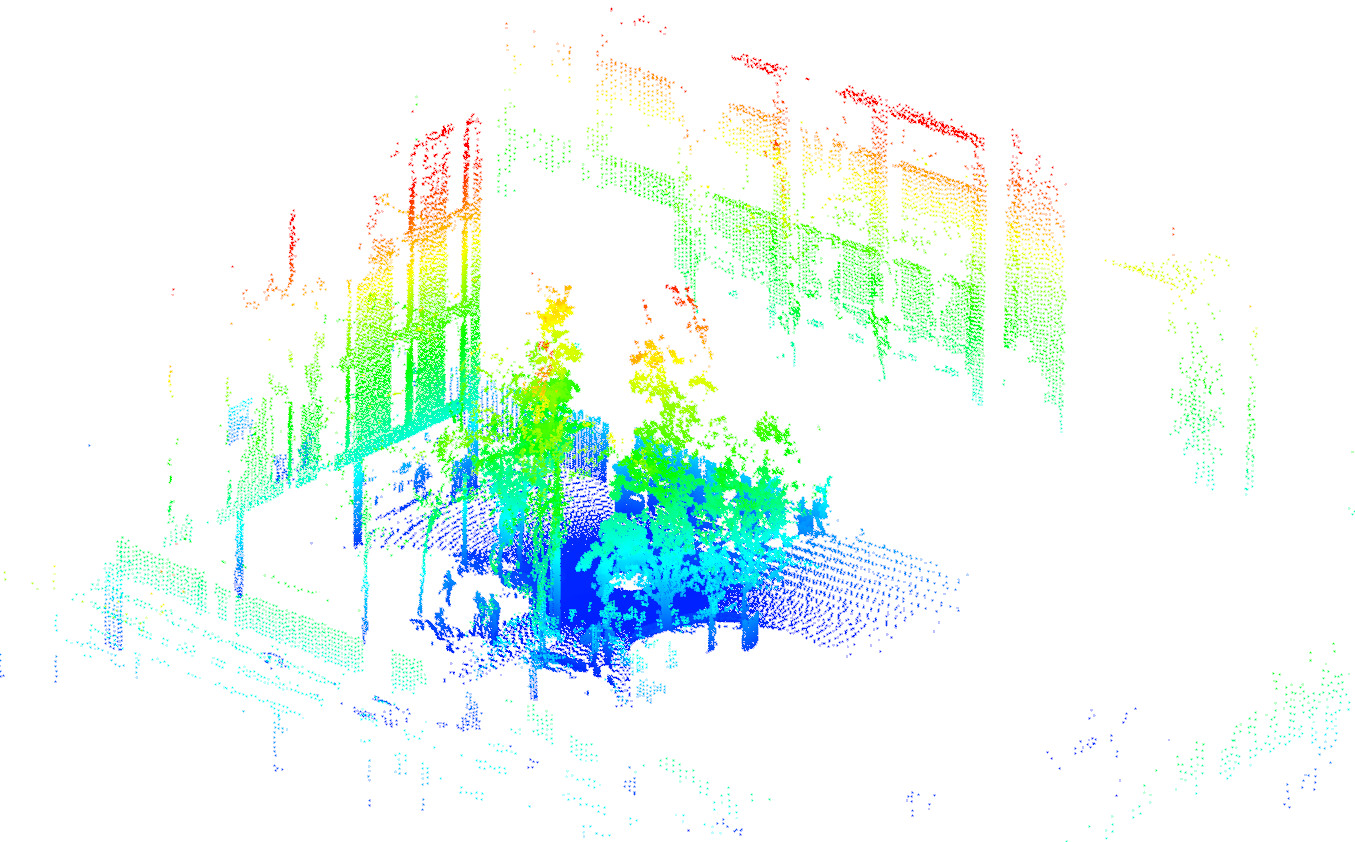

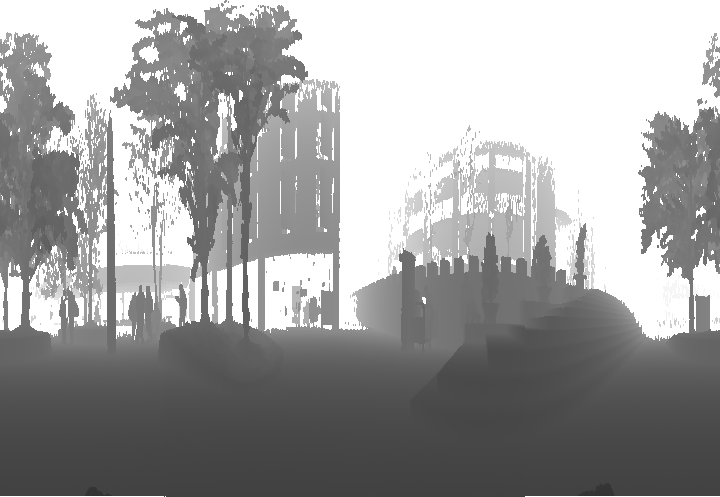

Figure 2: Scan 8 in the Collapsed Car Parking Lot. (a): as a point cloud colored by height; (b): as a range image built by aligning each scan slice as a row; (c): as a range image after reordering the points. Note the difference between the range images (b) and (c).

License

Creative Commons Attribution-Noncommercial-Share Alike 4.0 License

References

- Pathak, K., Birk, A., Vaskevicius, N., Pfingsthorn, M., Schwertfeger, S., and Poppinga, J. (2010). Online three-dimensional slam by registration of large planar surface segments and closed-form pose-graph relaxation. Journal of Field Robotics, 27(1):52-84.

- Xiao J., Adler B., Zhang H., and Zhang J. (2013). Planar Segment Based Three-dimensional Point Cloud Registration in Outdoor Environments. Journal of Field Robotics, 30(4):552-582.

- Xiao J. (2013). Planar Segments Based Three-dimensional Robotic Mapping in Outdoor Environments. PhD thesis, University of Hamburg.

Barcelona Robot Lab Dataset

The dataset covers about 10,000 sq m of the UPC Nord Campus in Barcelona and is intended for use in mobile robotics and computer vision research. It is available at [Barcelona Robot Lab Dataset]. In order to apply algorithms which require organized point clouds, the points in each scan have been reordered according to their spherical coordinates. The organized point clouds can be downloaded at [organized point clouds]. The point clouds are stored as PCD files; please refer to the Point Cloud Library (PCL) documentation for details of the file format.
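To give an idea of what reordering by spherical coordinates can look like, here is a minimal sketch that bins unordered 3D points into a rows × columns grid by elevation and azimuth. It is not the procedure used to produce the files above: the grid size, the full-sphere angular ranges, the rule of keeping the nearest point when two points fall into one cell, and the name `organize_by_spherical` are all assumptions made for illustration.

```python
import math


def organize_by_spherical(points, rows, cols):
    """Bin (x, y, z) points into a rows x cols grid.

    Rows index elevation from -90 to +90 deg, columns index azimuth from
    -180 to +180 deg. Each cell holds (range, point) or None if empty.
    When several points land in one cell, the nearest one is kept.
    """
    grid = [[None] * cols for _ in range(rows)]
    for x, y, z in points:
        r = math.sqrt(x * x + y * y + z * z)
        if r == 0.0:
            continue  # direction is undefined at the sensor origin
        azimuth = math.atan2(y, x)
        # Clamp to guard against rounding pushing z / r outside [-1, 1].
        elevation = math.asin(max(-1.0, min(1.0, z / r)))
        col = min(int((azimuth + math.pi) / (2.0 * math.pi) * cols), cols - 1)
        row = min(int((elevation + math.pi / 2.0) / math.pi * rows), rows - 1)
        current = grid[row][col]
        if current is None or r < current[0]:
            grid[row][col] = (r, (x, y, z))
    return grid


# Tiny usage example on a 2 x 4 grid.
demo = organize_by_spherical(
    [(1.0, 0.0, 0.0), (2.0, 0.0, 0.0), (0.0, 0.0, -1.0)], rows=2, cols=4
)
```

Empty cells stay `None`, which plays the role that invalid (NaN) points usually play in an organized PCD file. The range stored in each cell can be used directly as the pixel value of a range image.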

Figure 1: Scan 5 as a point cloud and as a grayscale range image.

License

Creative Commons Attribution-Noncommercial-Share Alike 4.0 License

References

- Xiao J., Adler B., Zhang H., and Zhang J. (2013). Planar Segment Based Three-dimensional Point Cloud Registration in Outdoor Environments. Journal of Field Robotics, 30(4):552-582.

- Xiao J. (2013). Planar Segments Based Three-dimensional Robotic Mapping in Outdoor Environments. PhD thesis, University of Hamburg.

Object Affordance Recognition for HRI

The data was collected by Jinpeng Mi at the Department of Informatics, University of Hamburg. This dataset is used for learning human-centred object affordances, e.g., call-able, drinkable, edible, playable, readable, write-able, and cleanable. The dataset is composed of 42 objects that are commonly used in households. The dataset can be downloaded at [object affordance recognition for HRI].

License

Creative Commons Attribution-Noncommercial-Share Alike 4.0 License

References

- J. Mi, S. Tang, Z. Deng, M. Görner, and J. Zhang. Object Affordance based Multimodal Fusion for Natural Human-Robot Interaction. Cognitive Systems Research, 2018. doi: 10.1016/j.cogsys.2018.12.010.